The Future of Connectivity: How Multimodal Sensing and AI Will Drive Joint Communication and Sensing Beyond 6G

The future of wireless networks is set to go far beyond transmitting data at high rates. These networks will not only exchange information; they will also sense and model their environment in real time, in a paradigm known as Joint Communication and Sensing (JC&S).

Imagine a world where your devices—routers, phones, cars, or smart appliances—don’t just communicate with each other but also understand their surroundings and adapt their behaviour on the fly.

As autonomous systems, smart cities, and industrial automation take centre stage, JC&S systems will be critical for ensuring seamless operation in complex, constantly changing environments. At the 6G Flagship, we are reimagining the possibilities of JC&S. Our vision goes beyond current developments in these systems: we propose devices that not only sense and communicate but also model the world and continuously learn from it.

But how will these devices acquire this level of intelligence? The answer lies in combining multimodal sensing with AI-driven simulations, creating a synergy that redefines what networks and devices can achieve.

Moving beyond traditional JC&S: The power of multimodal sensing

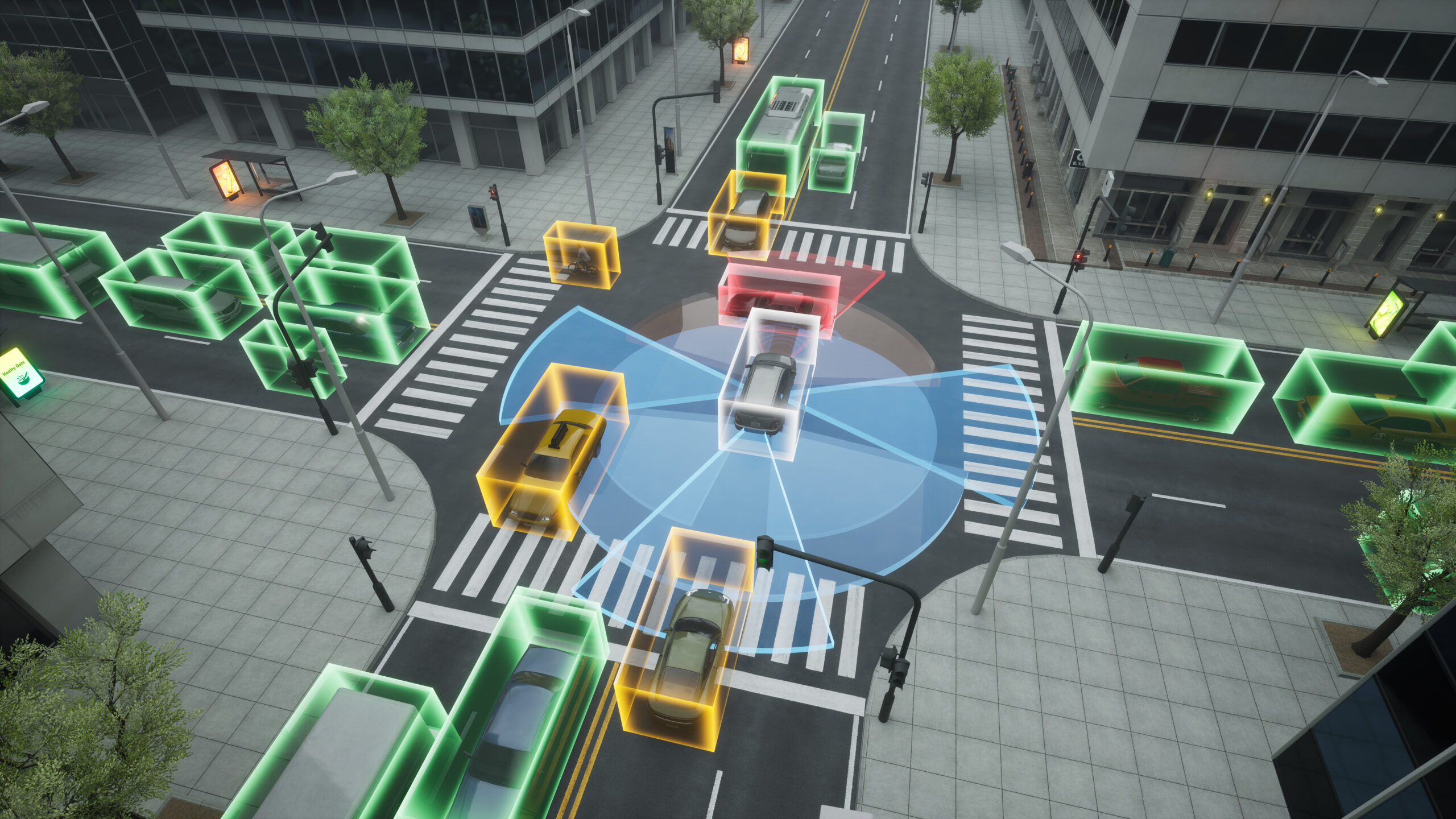

The current approach to JC&S mainly involves processing radio frequency (RF) data. This will not be enough in the future. As networks become more complex, JC&S systems will need to rely on multimodal sensing that combines data from multiple sources, such as cameras, RF transceivers, LiDAR, and even the lighting infrastructure. This richer set of inputs gives devices a more complete understanding of their surroundings, improving their ability to sense and communicate in real time.
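One common way to combine estimates from several modalities is inverse-variance weighting, which trusts each sensor in proportion to its precision. The sketch below is an illustration, not the 6G Flagship's fusion method; it assumes independent, unbiased 1-D position estimates, and the modality labels, positions, and variances are made-up values.

```python
# Minimal sketch: fuse independent position estimates from several sensing
# modalities by inverse-variance weighting. The modality names, positions and
# variances below are illustrative assumptions, not measured values.
from dataclasses import dataclass


@dataclass
class Estimate:
    modality: str     # e.g. "camera", "rf", "lidar" (hypothetical labels)
    position: float   # 1-D position of a target, in metres
    variance: float   # measurement variance, in m^2 (must be > 0)


def fuse(estimates: list[Estimate]) -> tuple[float, float]:
    """Return the inverse-variance weighted position and its variance.

    Assumes the estimates are unbiased and their errors are independent.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    fused = sum(w * e.position for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


readings = [
    Estimate("camera", 10.0, 0.25),
    Estimate("rf", 10.4, 1.0),
    Estimate("lidar", 9.9, 0.04),
]
position, variance = fuse(readings)
print(f"fused position {position:.3f} m, variance {variance:.4f} m^2")
```

With these numbers the fused variance (1/30 m²) is smaller than that of the best single sensor (0.04 m²), which is the basic payoff of fusing modalities.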

Our approach goes a step further. We envision JC&S devices that don’t just respond to the environment; they model it. While traditional methods focus on real-time decisions based on incoming data, future devices will maintain five-dimensional (5D) models of the world around them. These models combine spatial (three dimensions), temporal, and spectral information, and they also allow the devices to simulate various scenarios and outcomes. This will significantly enhance their ability to predict and adapt to changes in the environment.
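To make the idea of a 5D model more concrete, here is a minimal sketch of one possible data structure: a sparse grid indexed by three spatial coordinates, a time slot, and a frequency band. The class name `EnvironmentModel5D`, the cell sizes, and the choice to store averaged received power are assumptions for illustration. The sketch covers only representation and lookup, not the scenario simulation described above.

```python
# Hypothetical sketch of a sparse 5-D environment model: three spatial axes,
# one time axis and one spectral axis. Cell sizes and the stored quantity
# (received power in milliwatts, averaged per cell) are assumptions.
from collections import defaultdict
from typing import Optional

Cell = tuple[int, int, int, int, int]  # (ix, iy, iz, time slot, band)


class EnvironmentModel5D:
    def __init__(self, voxel_m: float = 0.5, slot_s: float = 0.1,
                 band_hz: float = 20e6) -> None:
        self.voxel_m = voxel_m   # spatial cell edge length (m)
        self.slot_s = slot_s     # time slot length (s)
        self.band_hz = band_hz   # spectral bin width (Hz)
        # Running sum and count per occupied cell; empty cells cost nothing.
        self._sum: dict[Cell, float] = defaultdict(float)
        self._count: dict[Cell, int] = defaultdict(int)

    def _cell(self, x: float, y: float, z: float, t: float, f: float) -> Cell:
        v = self.voxel_m
        return (int(x // v), int(y // v), int(z // v),
                int(t // self.slot_s), int(f // self.band_hz))

    def update(self, x: float, y: float, z: float, t: float, f: float,
               power_mw: float) -> None:
        """Add one measurement to the cell that contains it."""
        cell = self._cell(x, y, z, t, f)
        self._sum[cell] += power_mw
        self._count[cell] += 1

    def query(self, x: float, y: float, z: float, t: float,
              f: float) -> Optional[float]:
        """Mean power in the containing cell, or None if never observed."""
        cell = self._cell(x, y, z, t, f)
        n = self._count.get(cell, 0)  # .get() does not create empty cells
        return self._sum[cell] / n if n else None


model = EnvironmentModel5D()
model.update(1.2, 3.4, 0.0, t=0.05, f=3.50e9, power_mw=2e-6)
model.update(1.3, 3.3, 0.1, t=0.07, f=3.51e9, power_mw=4e-6)
print(model.query(1.25, 3.35, 0.0, t=0.06, f=3.505e9))
print(model.query(10.0, 10.0, 10.0, t=0.06, f=3.505e9))
```

A sparse dictionary is used because most cells of a fine 5D grid are never observed; a dense array of the same resolution would be mostly empty.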

AI-driven simulations: The future of adaptation and learning

The key component of this JC&S vision is AI-in-the-loop simulation. Devices will no longer just sense their environment and make decisions; they will continuously learn and adapt through large-scale, AI-powered simulations. These simulations generate synthetic data that let devices refine their models and behaviours even before they encounter real-world scenarios.

As a result, the devices’ policies (the rules governing how they sense and communicate) will evolve dynamically. Through AI-driven simulations running in real time, new policies will be generated from data gathered in previous experiences or in synthetic environments. Devices can download these updated policies and adapt their behaviour accordingly. The result is an adaptive system in which devices not only sense and model their environment but also continuously learn how to sense and communicate better.
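The policy loop can be illustrated with a toy example: a server trains a policy on synthetic episodes from a simulator, and a device replaces its policy only when a newer version is offered. Everything here is hypothetical. `simulate_reward` stands in for a large-scale simulator, the three actions are invented sensing and communication modes, and an epsilon-greedy bandit is swapped in for whatever learner a real system would use.

```python
# Hypothetical sketch of AI-in-the-loop policy refinement: a server trains a
# policy on synthetic data from a simulator, and a device downloads it.
# The simulator, action set and reward model are illustrative assumptions.
import random
from dataclasses import dataclass, field

ACTIONS = ["wide_beam_sense", "narrow_beam_comm", "split_time_slots"]


def simulate_reward(action: str, rng: random.Random) -> float:
    """Toy stand-in for a large-scale simulator: noisy reward per action."""
    true_mean = {"wide_beam_sense": 0.4, "narrow_beam_comm": 0.6,
                 "split_time_slots": 0.7}[action]
    return true_mean + rng.gauss(0.0, 0.1)


@dataclass
class Policy:
    version: int
    values: dict[str, float] = field(default_factory=dict)

    def act(self) -> str:
        # Greedy choice over the value estimates learned in simulation.
        return max(self.values, key=self.values.get)


def train_in_simulation(episodes: int, version: int, seed: int = 0,
                        epsilon: float = 0.1) -> Policy:
    """Epsilon-greedy bandit learning on synthetic episodes."""
    rng = random.Random(seed)
    values = {a: 0.0 for a in ACTIONS}
    counts = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore
        else:
            action = max(values, key=values.get)  # exploit
        reward = simulate_reward(action, rng)
        counts[action] += 1
        # Incremental mean update of the action's estimated value.
        values[action] += (reward - values[action]) / counts[action]
    return Policy(version=version, values=values)


def maybe_update(current: Policy, offered: Policy) -> Policy:
    """Device-side rule: adopt a downloaded policy only if it is newer."""
    return offered if offered.version > current.version else current


device_policy = Policy(version=0, values={a: 0.0 for a in ACTIONS})
device_policy = maybe_update(device_policy,
                             train_in_simulation(episodes=2000, version=1))
print(device_policy.version, device_policy.act())
```

Versioning keeps the device-side rule trivial; a real deployment would also need to validate a downloaded policy before trusting it.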

This is a major leap from current JC&S approaches, where devices mostly rely on pre-programmed or static rules. In this future, devices will continuously evolve and respond to the complexities of their environment, making decisions that optimize both communication and sensing tasks.

The future of JC&S: Evolving beyond communication

Accurate simulations can greatly improve the efficiency of data transmission, but the goal is broader: learning from the environment and creating a dynamic dialogue between devices and their surroundings. Because future networks will be multimodal, devices will rely on data from multiple sources, including visual information, RF signals, and environmental data. This fusion will allow a deeper understanding of the environment, beyond what is visible to humans and at an unprecedented scale.

For instance, in autonomous vehicles, multimodal JC&S will allow cars not only to “see” their surroundings using cameras and LiDAR but also to predict how those surroundings are likely to change. Cars will receive updated communication and sensing policies that let them make real-time decisions about adjusting their speed, position, and route based on environmental factors such as weather, traffic, or obstacles detected beyond the line of sight.
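In its most basic form, predicting how surroundings will change means propagating a motion model forward in time. The sketch uses the prediction step of a constant-velocity Kalman filter along one axis of a tracked obstacle. This standard technique is chosen purely for illustration and is not the method the article proposes; the state values and the acceleration noise level are assumptions.

```python
# Minimal sketch of the "predict" half of a constant-velocity Kalman filter,
# used here to forecast where a tracked obstacle will be a short time ahead.
# The motion model, noise level and numbers are illustrative assumptions.
from dataclasses import dataclass


@dataclass
class AxisState:
    pos: float   # position along one axis (m)
    vel: float   # velocity along that axis (m/s)
    p_pp: float  # variance of position (m^2)
    p_pv: float  # position-velocity covariance
    p_vv: float  # variance of velocity ((m/s)^2)


def predict(s: AxisState, dt: float, accel_var: float) -> AxisState:
    """Propagate state and covariance: x' = F x, P' = F P F^T + Q.

    F = [[1, dt], [0, 1]]; Q is the discrete white-noise acceleration
    model with acceleration variance `accel_var`.
    """
    pos = s.pos + s.vel * dt
    # Entries of F P F^T for a symmetric P.
    p_pp = s.p_pp + 2 * dt * s.p_pv + dt * dt * s.p_vv
    p_pv = s.p_pv + dt * s.p_vv
    p_vv = s.p_vv
    # Entries of Q = accel_var * G G^T with G = [dt^2 / 2, dt].
    q_pp = accel_var * dt**4 / 4
    q_pv = accel_var * dt**3 / 2
    q_vv = accel_var * dt**2
    return AxisState(pos, s.vel, p_pp + q_pp, p_pv + q_pv, p_vv + q_vv)


obstacle = AxisState(pos=20.0, vel=-5.0, p_pp=0.25, p_pv=0.0, p_vv=1.0)
ahead = predict(obstacle, dt=0.5, accel_var=0.5)
print(f"predicted {ahead.pos:.2f} m, std {ahead.p_pp ** 0.5:.2f} m")
```

For 2-D or 3-D tracking, the same step can be applied per axis if the axes are assumed independent. The growth of `p_pp` shows that the forecast becomes less certain the further ahead it looks, which is one reason fresh measurements and updated policies matter.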

Similarly, in smart cities, JC&S systems will monitor infrastructure, control traffic, and optimize the use of resources in real time. As these systems learn and adapt, they will provide cities with the ability to respond to changing conditions with far greater agility.

The challenges ahead: Data, complexity, and synchronization

As groundbreaking as this future may be, there are still significant challenges that must be addressed:

First, the integration of AI, multimodal sensing, and communication requires massive amounts of data—data that needs to be collected, processed, and annotated on a scale we’ve never seen before. Automation will be essential here, as manually processing this ocean of information is close to impossible.

Second, the computational complexity of managing multimodal data and running sophisticated AI models in real time will require advancements in both algorithms and hardware. Devices will need to balance real-time sensing tasks, communication, and computationally intensive learning routines, switching between these functions depending on environmental demands.
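As a hypothetical illustration of this balancing act, the function below splits one frame's time budget between sensing, communication, and learning. The name `allocate_frame`, the rule that sensing gets a larger share when the scene changes quickly, and the 20% to 60% bounds are all assumptions, not a published scheduler.

```python
# Hypothetical sketch: split each frame's time budget between sensing,
# communication and on-device learning. The priority rule (more sensing
# when the scene changes quickly) and all numbers are assumptions.


def allocate_frame(budget_ms: float, scene_change_rate: float,
                   comm_demand_ms: float) -> dict[str, float]:
    """Return the milliseconds allotted to each function for one frame."""
    if budget_ms <= 0:
        raise ValueError("budget must be positive")
    # Sensing share grows with scene dynamics, clamped to [20%, 60%].
    sensing_share = min(0.6, max(0.2, scene_change_rate))
    sensing = sensing_share * budget_ms
    # Communication gets what it asks for, within what remains.
    comm = min(comm_demand_ms, budget_ms - sensing)
    # Any leftover time goes to background learning routines.
    learning = budget_ms - sensing - comm
    return {"sensing": sensing, "communication": comm, "learning": learning}


calm = allocate_frame(10.0, scene_change_rate=0.1, comm_demand_ms=5.0)
busy = allocate_frame(10.0, scene_change_rate=0.9, comm_demand_ms=5.0)
print(calm)
print(busy)
```

In a calm scene, learning receives the spare time; in a fast-changing one, sensing takes priority and learning is deferred.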

Finally, a major hurdle is synchronizing the different sensing modalities. For multimodal data to be effective, devices must accurately timestamp and calibrate the input from cameras, RF sensors, and other data sources. Without proper synchronization, fusing these data types will be error-prone, which could undermine real-time decisions.
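Here is a minimal sketch of one synchronization step: mapping RF timestamps onto the camera clock and pairing each camera frame with its nearest RF sample, while rejecting pairs that are too far apart in time. It assumes sorted timestamps in seconds and an already-known clock offset (RF clock minus camera clock). Estimating that offset and spatial calibration are outside the sketch.

```python
# Minimal sketch of timestamp alignment between two sensing streams. It
# assumes each stream has sorted timestamps in seconds and that the RF
# clock's offset relative to the camera clock is already known; both
# assumptions are illustrative.
from bisect import bisect_left


def align(camera_ts: list[float], rf_ts: list[float], rf_offset_s: float,
          tolerance_s: float) -> list[tuple[int, int]]:
    """Pair each camera frame with the nearest RF sample on a common clock.

    `rf_offset_s` is RF clock minus camera clock. Returns
    (camera_index, rf_index) pairs; frames with no RF sample within
    `tolerance_s` are left unpaired rather than fused with stale data.
    """
    rf_common = [t - rf_offset_s for t in rf_ts]  # RF times on camera clock
    pairs = []
    for i, t in enumerate(camera_ts):
        j = bisect_left(rf_common, t)
        # The nearest sample is either just before t or at/after it.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(rf_common)]
        if not candidates:
            continue
        best = min(candidates, key=lambda k: abs(rf_common[k] - t))
        if abs(rf_common[best] - t) <= tolerance_s:
            pairs.append((i, best))
    return pairs


camera = [0.000, 0.033, 0.066, 0.100]
rf = [0.010, 0.043, 0.075, 0.200]
pairs = align(camera, rf, rf_offset_s=0.010, tolerance_s=0.005)
print(pairs)
```

Here the last camera frame stays unpaired because no RF sample lies within 5 ms of it on the common clock, which is preferable to fusing it with mismatched data.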

Looking forward: A smarter, more adaptive world

The future of JC&S promises a radical shift in how devices sense, communicate, and interact with the world around them. By combining multimodal sensing with AI-driven modelling and adaptive policies, we are heading toward a future where networks are not only fast and reliable but also intelligent and flexible. Devices will continuously evolve, learning from their environment and improving their performance over time.

As 6G and beyond take shape, this vision of JC&S will be a key component of next-generation connectivity, driving innovation for users, industries, and organizations.

The road ahead is not without challenges, but with the right technologies in place, we can expect JC&S systems to play a central role in shaping the future of communication and sensing. We at the 6G Flagship at the University of Oulu are already working towards it.

About the author

Strategic Research Area Leader

Miguel Bordallo López
